Feast Launches Support for Vector Databases 🚀

Feast and Vector Databases

With the rise of generative AI applications, the need to serve vectors has grown quickly. We…

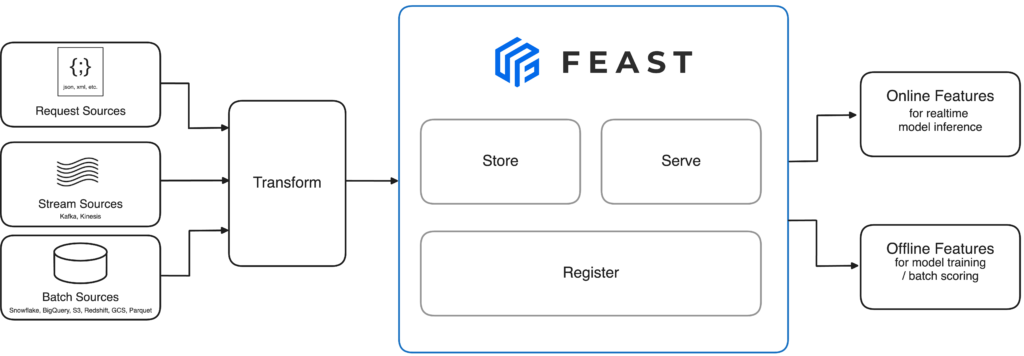

Feast is a standalone, open-source feature store that organizations use to store and serve features consistently for offline training and online inference.

Answer your organization’s feature storing and serving needs with Feast’s customizable open-source feature store.

No. Feast users cannot natively upgrade their Feast environment to a managed feature platform like Tecton. However, Tecton does support importing Feast feature repositories, and Feast users can also use this script to convert Feast objects into Tecton objects.

Feast users looking to transition to a managed feature platform like Tecton should also note that because Feast does not support transformations, they will need to either rewrite their transformations in Tecton or have Tecton ingest their features (e.g., via FeatureTable or Tecton’s Ingest API).

Finally, migrating from Feast to Tecton is not a self-serve experience. Anyone who wishes to migrate will need to work with sales to get MFT access and complete initial integration work, load tests, security evaluations, and so on. Tecton also still needs to perform cluster installs before users can get started on their projects.

No. Feast is not the unmanaged version of Tecton, and Tecton is NOT built on Feast. Tecton and Feast have independent roots, were started by different companies, and are built on different architectures. Feast is an open-source feature store, and Tecton is a managed feature platform.

We wrote an article on this! What is a Feature Store?

A feature store serves two purposes:

A feature platform is a system that orchestrates existing data infrastructure to continuously transform, store, and serve data for operational or real-time machine learning applications. A feature platform, of which the feature store is a core component, covers the whole feature lifecycle:

Partially. Feast supports on-demand transformations, which generate features by combining request data with precomputed features (e.g. time_since_last_purchase), and there are plans to allow lightweight feature engineering.
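As a rough illustration of what such an on-demand transformation computes, the plain-Python sketch below combines a request-time value with a precomputed feature to derive `time_since_last_purchase`. The function name, argument names, and sample timestamps are assumptions made for illustration. This is not Feast's on-demand feature view API, only the kind of arithmetic such a transformation might perform.

```python
from datetime import datetime, timezone


def time_since_last_purchase(request_time: datetime, last_purchase_time: datetime) -> float:
    """Return the seconds elapsed between the last purchase and the request.

    request_time: supplied with the inference request (request data).
    last_purchase_time: a precomputed feature read from the online store.
    Both names are hypothetical and used only for illustration.
    """
    return (request_time - last_purchase_time).total_seconds()


# Hypothetical values: a purchase at 12:00 UTC and a request 90 minutes later.
last_purchase = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
request = datetime(2024, 1, 1, 13, 30, tzinfo=timezone.utc)
elapsed_seconds = time_since_last_purchase(request, last_purchase)
```

The result depends on when the request arrives, which is why a feature like this cannot be fully precomputed in a batch pipeline.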

Many users run Feast today in combination with a separate system that computes feature values. Most often, these are pipelines written in SQL (e.g. managed with dbt projects) or with a Python DataFrame library, and scheduled to run periodically.

If you need a managed feature store that provides feature computation, check out Tecton.

Feast is a Python library with an optional CLI. You can install it using pip.

You might want to run certain Feast commands periodically (e.g. `feast materialize-incremental`, which updates the online store). We recommend using schedulers such as Airflow or Cloud Composer for this.
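A scheduler task might wrap the command along these lines. This is a minimal sketch: it assumes the `feast` CLI is on the PATH, that the command takes an end timestamp as its argument (as in `feast materialize-incremental 2024-01-01T00:00:00`), and that the feature repository lives at a path you supply. The helper names and the `dry_run` flag are hypothetical.

```python
import subprocess
from datetime import datetime, timezone
from typing import List, Optional


def build_materialize_command(end_time: datetime) -> List[str]:
    """Build the CLI invocation, passing the end timestamp in ISO 8601 form."""
    return ["feast", "materialize-incremental", end_time.strftime("%Y-%m-%dT%H:%M:%S")]


def run_materialization(
    repo_path: str, end_time: Optional[datetime] = None, dry_run: bool = False
) -> List[str]:
    """Run the command from the feature repo directory and return the command used."""
    end_time = end_time or datetime.now(timezone.utc)
    command = build_materialize_command(end_time)
    if not dry_run:
        # check=True raises CalledProcessError on a non-zero exit code,
        # so the scheduler can mark the task as failed.
        subprocess.run(command, cwd=repo_path, check=True)
    return command


# Dry run: only builds the command and does not invoke the feast CLI.
command = run_materialization(".", datetime(2024, 1, 1, tzinfo=timezone.utc), dry_run=True)
```

A scheduled job (for example, an Airflow task) could call `run_materialization` with the path to the feature repository on a fixed interval.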

For more details, please see the quickstart guide

Feast supports data sources across the major cloud platforms (AWS, GCP, Azure, Snowflake) and offers plugins for other data sources such as Hive.

Feast also manages the storage of feature data in a more performant online store (e.g. Redis, DynamoDB, Datastore, Postgres) and lets you push data directly to that store (e.g. from streaming sources like Kafka).

See more details at third party integrations

For guidance on how to structure your feature repos, how to set up regular materialization of feature data, and how to deploy Feast in production, see our guide Running Feast in Production.

Feast is designed to work at scale and to support low-latency online serving. We support different deployment patterns to meet different operational requirements (see guide).

See our benchmark post (which comes with a benchmark suite on GitHub). In benchmarks, we’ve seen single-entity p99 read times of <10 ms with a Python feature server on Redis and <4 ms with a Go feature server. Feast is also performant for batch online retrieval (p99 < 20 ms in benchmarks).
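For readers reproducing numbers like these, p99 is the latency that 99% of requests stay at or below. Here is a minimal sketch of measuring it, using the nearest-rank definition of a percentile. The `fetch` callable stands in for whatever online read is being measured; it and the helper names are assumptions, not part of the Feast benchmark suite.

```python
import time
from typing import Callable, List


def percentile(samples: List[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Integer form of ceil(pct * n / 100), avoiding floating-point rounding.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def measure_latencies_ms(fetch: Callable[[], object], calls: int) -> List[float]:
    """Time `calls` invocations of fetch and return each duration in milliseconds."""
    durations = []
    for _ in range(calls):
        start = time.perf_counter()
        fetch()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


# Deterministic check of the percentile helper with synthetic latencies of 1..100 ms.
synthetic = [float(ms) for ms in range(1, 101)]
p99 = percentile(synthetic, 99)

# Timing a placeholder callable; replace the lambda with a real online read.
latencies = measure_latencies_ms(lambda: None, calls=50)
```

Measured values depend heavily on hardware, network, and store configuration, so results from a sketch like this are only comparable within the same setup.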

No. Feast is not a database. It is a tool that manages data stored in other systems (e.g. BigQuery, Cloud Firestore, Redshift, DynamoDB).